The dust has settled on the December 2025 Core Update. If you operate in the finance, health, or legal sectors (YMYL) and saw your #1 rankings vanish, you likely didn’t have a quality issue. You had a format issue.

The winner wasn’t a competitor website. It was YouTube.

If you’re seeing your top rankings replaced by video carousels and AI Overviews, you haven’t been penalized; you’ve been reformatted. The era of text-only authority for “Your Money or Your Life” topics is over. Here is why it happened, and the specific strategy you need to implement to survive in 2026: Multimedia Entity Stacking.

The Autopsy: It Wasn’t a Quality Issue, It Was a “Format” Re-weighting

For years, Google relied on text signals to judge authority. Backlinks, keyword density, and semantic relevance were the primary metrics. The December 2025 update fundamentally changed this by integrating the Gemini 2.0 model directly into the core ranking algorithm.

Gemini 2.0 doesn’t just read; it watches.

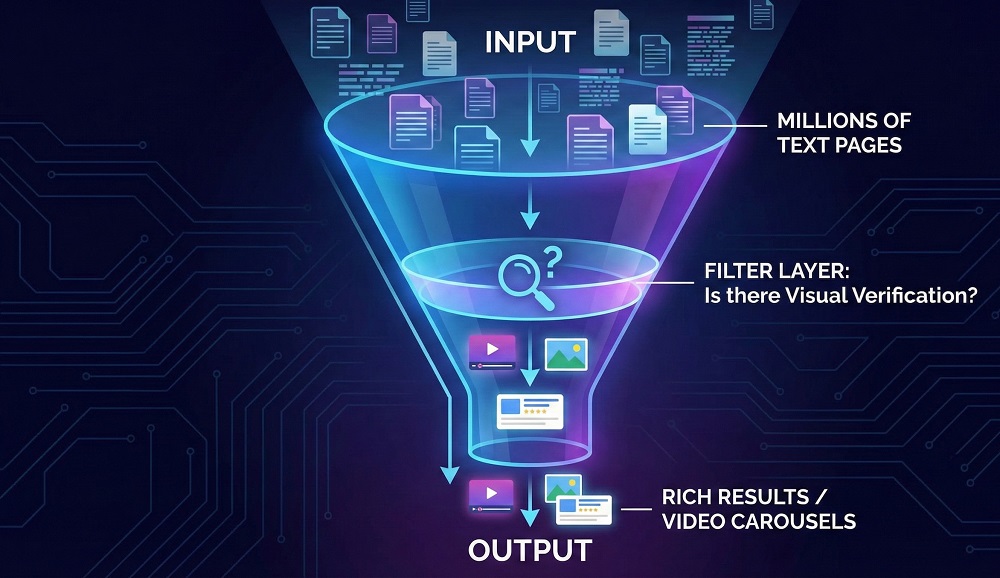

We are witnessing a Format Re-weighting Event. The “Helpful Content” signal now heavily weights “Demonstrated Experience.” For YMYL queries, Google no longer trusts text alone. Anyone can use an LLM to generate a 2,000-word financial guide. Text has become cheap.

Video, however, provides Proof of Life. It offers audio-visual verification that a real human with real expertise is behind the advice. If your site relies solely on text, you are now fighting with one hand tied behind your back.

Why “Helpful Content” Now Requires Visual Verification

The concept of E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) has evolved. The “Experience” component is now the most volatile variable.

Previously, you proved experience by writing “I have 10 years of experience.” Now, Google requires Visual Verification.

Gemini 2.0 parses video content frame by frame. It uses OCR (Optical Character Recognition) to read text on your screen and audio analysis to match your spoken words against the user’s query. It confirms that the entity (you) actually knows the topic, rather than just aggregating data.

If a user asks “how to fix a radiator,” a text guide is helpful. A video showing the hands-on repair is verified experience. Google’s index now favors pages that offer this multimodal proof over text-heavy pages that lack it.

The Solution: What is Multimedia Entity Stacking?

Stop treating video as a supplement. It is now the foundation of your architecture.

Multimedia Entity Stacking is a strategy where we fuse video and text into a single, verifiable entity. We do not just embed a YouTube video at the bottom of a post. We architect the blog post and the video to mirror each other perfectly, forcing Google to index them as one comprehensive resource.

Comparison: Old SEO vs. Multimedia Entity Stacking

| Feature | Old Text-Based SEO | Multimedia Entity Stacking |
|---|---|---|
| Primary Signal | Text relevance & Backlinks | Multimodal verification (Text + Audio + Visual) |
| Structure | H2s based on keyword volume | H2s synced with Video Chapters |
| Schema | Article Schema | Article + VideoObject (with Clips) |
| Indexing Goal | Featured Snippet (Text) | Visual Position 0 (Video Carousel) |
| Content Flow | Read top to bottom | Watch, Read, or Listen (User Choice) |

Execution Guide: Syncing H2s with Video Chapters

To execute this, you must reverse your workflow. Do not write the blog first. Plan the entity structure first.

1. The Mirror Method

Your video script and your blog outline must be identical. If your video has a chapter at 02:30 titled “The Risks of High-Yield Savings,” your blog post must have an H2 titled “The Risks of High-Yield Savings.”

This alignment signals to Gemini that the text passage is directly supported by a video segment.
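One way to enforce the Mirror Method before publishing is a small script that compares the post’s H2 text with the video’s chapter list. This is a Python sketch under stated assumptions: chapters are written in the video description as `MM:SS Title` (or `H:MM:SS Title`) lines, the post is available as HTML, and two titles count as matching when they are identical apart from case and spacing. The names `mirror_report`, `parse_chapters`, and `H2Collector` are hypothetical.

```python
import re
from html.parser import HTMLParser

# Assumed chapter format: one "MM:SS Title" or "H:MM:SS Title" line per
# chapter in the video description (hypothetical input format).
CHAPTER_LINE = re.compile(r"^\s*((?:\d{1,2}:)?\d{1,2}:\d{2})\s+(.+?)\s*$")


class H2Collector(HTMLParser):
    """Collects the text of every <h2> element in the blog post's HTML."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._in_h2 = False
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self._buf = []

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_h2:
            self._in_h2 = False
            self.headings.append("".join(self._buf).strip())

    def handle_data(self, data):
        if self._in_h2:
            self._buf.append(data)


def parse_chapters(description):
    """Returns (timestamp, title) pairs for each chapter line in a description."""
    chapters = []
    for line in description.splitlines():
        match = CHAPTER_LINE.match(line)
        if match:
            chapters.append((match.group(1), match.group(2)))
    return chapters


def normalize(title):
    # Ignore case and repeated whitespace; punctuation must still match.
    return " ".join(title.lower().split())


def mirror_report(blog_html, description):
    """Returns (H2s with no matching chapter, chapters with no matching H2)."""
    collector = H2Collector()
    collector.feed(blog_html)
    collector.close()
    h2s = {normalize(h) for h in collector.headings}
    chapters = {normalize(title) for _, title in parse_chapters(description)}
    return sorted(h2s - chapters), sorted(chapters - h2s)


html = "<h2>The Risks of High-Yield Savings</h2><p>...</p><h2>How Rates Are Set</h2>"
desc = "00:00 Intro\n02:30 The Risks of High-Yield Savings\n05:10 How Rates Are Set"
print(mirror_report(html, desc))  # ([], ['intro'])
```

Here every H2 has a chapter, and only the intro chapter lacks an H2; whether to add a matching heading for it or accept the gap is an editorial call.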

2. The “Double-Dip” Ranking

When you sync these elements, you target two prime positions:

- The Text Snippet: For users who want to scan.

- The Key Moment: For users who want to watch.

By aligning the H2 with the Chapter, you allow Google to direct users to the exact second in the video that answers their query, directly from the SERP.
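To check which second a given H2 should send users to, it helps to turn each chapter timestamp into a URL that starts playback there. This minimal sketch assumes the public YouTube watch URL with a `t` start-time parameter; `VIDEO_ID` and the helper names are placeholders, and it does not model how Google actually selects key moments.

```python
from urllib.parse import urlencode


def timestamp_to_seconds(ts):
    """Converts a chapter timestamp such as "02:30" or "1:02:30" to seconds."""
    seconds = 0
    for part in ts.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def key_moment_url(video_id, ts):
    """Builds a YouTube watch URL that starts playback at the given timestamp."""
    # Assumes the public watch URL format with a "t" start-time parameter.
    query = urlencode({"v": video_id, "t": f"{timestamp_to_seconds(ts)}s"})
    return f"https://www.youtube.com/watch?{query}"


# "VIDEO_ID" is a placeholder, not a real video.
print(key_moment_url("VIDEO_ID", "02:30"))
# https://www.youtube.com/watch?v=VIDEO_ID&t=150s
```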

Technical Implementation: Scripting for the “Gemini Bot”

You are no longer just writing for humans; you are scripting for a bot that listens.

Scripting for Audio Parsing

Gemini 2.0 analyzes your transcript. You must speak your keywords clearly.

- Don’t say: “So, if you look at this thing here…”

- Do say: “If we analyze the Dec ’25 Core Update volatility, we see…”
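The Don’t/Do contrast above can be turned into a rough check you run on a script before recording. This sketch makes no claim about how Gemini processes audio; it only tests whether a chapter’s lines actually say the target phrase and flags lines that lean on vague, screen-dependent wording. The `VAGUE_PHRASES` list and the `audit_script` name are hypothetical.

```python
import re

# Hypothetical list of screen-dependent phrases that carry no keywords when
# heard without the picture; extend it for your own scripts.
VAGUE_PHRASES = ["this thing", "over here", "this stuff", "look at this"]


def audit_script(lines, keyword):
    """Checks one chapter's script lines against its target phrase.

    Returns (keyword_spoken, lines_with_vague_phrases). Matching is a
    case-insensitive whole-phrase search; it does not model speech
    recognition or how any search engine weighs audio.
    """
    text = " ".join(lines).lower()
    pattern = r"\b" + re.escape(keyword.lower()) + r"\b"
    spoken = re.search(pattern, text) is not None
    vague = [line for line in lines
             if any(phrase in line.lower() for phrase in VAGUE_PHRASES)]
    return spoken, vague


script = [
    "So, if you look at this thing here...",
    "If we analyze the Dec '25 Core Update volatility, we see...",
]
print(audit_script(script, "Core Update volatility"))
# (True, ['So, if you look at this thing here...'])
```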

Schema is the Glue

You must use VideoObject Schema. Specifically, you need to mark up the hasPart property with one Clip per chapter, giving each clip a name, startOffset, endOffset, and a url that points to its start time.

This markup tells Google: “This specific 3-minute section of the video is the exact answer to the query targeted by this specific H2 in the blog.” Without this schema, your video is just a black box to the search engine.
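A minimal Python sketch of generating that markup as JSON-LD, with one `Clip` per chapter. Assumptions: the video is hosted on YouTube, chapter titles are the exact H2 texts, start times are already in seconds, each clip ends where the next chapter begins, and the keys of the `video` dictionary (`id`, `thumbnail_url`, and so on) are a hypothetical input format. Validate the output with Google’s Rich Results Test before relying on it.

```python
import json


def iso8601_duration(total_seconds):
    """Formats whole seconds as an ISO 8601 duration, e.g. 754 -> "PT12M34S"."""
    minutes, seconds = divmod(total_seconds, 60)
    hours, minutes = divmod(minutes, 60)
    if hours:
        return f"PT{hours}H{minutes}M{seconds}S"
    return f"PT{minutes}M{seconds}S"


def build_video_schema(video, chapters):
    """Builds VideoObject JSON-LD with one Clip per chapter.

    video: dict with the hypothetical keys id, name, description,
           thumbnail_url, upload_date and duration_s.
    chapters: list of (h2_title, start_seconds) in playback order.
    """
    watch_url = f"https://www.youtube.com/watch?v={video['id']}"
    clips = []
    for i, (title, start) in enumerate(chapters):
        # Assumption: a clip ends where the next chapter starts; the last
        # clip runs to the end of the video.
        end = chapters[i + 1][1] if i + 1 < len(chapters) else video["duration_s"]
        clips.append({
            "@type": "Clip",
            "name": title,  # identical to the matching H2
            "startOffset": start,
            "endOffset": end,
            "url": f"{watch_url}&t={start}s",
        })
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": video["name"],
        "description": video["description"],
        "thumbnailUrl": video["thumbnail_url"],
        "uploadDate": video["upload_date"],
        "duration": iso8601_duration(video["duration_s"]),
        "embedUrl": f"https://www.youtube.com/embed/{video['id']}",
        "hasPart": clips,
    }


def to_script_tag(schema):
    """Wraps the schema in the script tag that goes into the post's HTML."""
    return ('<script type="application/ld+json">\n'
            + json.dumps(schema, indent=2)
            + "\n</script>")


video = {
    "id": "VIDEO_ID",  # placeholder values throughout
    "name": "High-Yield Savings Explained",
    "description": "What to check before moving cash into a high-yield account.",
    "thumbnail_url": "https://example.com/thumb.jpg",
    "upload_date": "2026-01-15",
    "duration_s": 754,
}
chapters = [("Intro", 0), ("The Risks of High-Yield Savings", 150),
            ("How Rates Are Set", 420)]
print(to_script_tag(build_video_schema(video, chapters)))
```

The same VideoObject can also be nested under the `video` property of the page’s Article markup, which is one way to express the “Article + VideoObject” pairing from the comparison table.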

Frequently Asked Questions

Why did my site traffic drop in December 2025?

The December 2025 Core Update shifted ranking priority toward multimodal content. Google’s Gemini 2.0 model now prioritizes pages that verify information through video evidence, audio transcripts, and visual demonstrations over purely text-based claims, especially in YMYL sectors.

What is Multimedia Entity Stacking?

Multimedia Entity Stacking is an SEO strategy where a creator synchronizes blog structure (H2s) with video timestamps. By binding them with VideoObject Schema, you create a unified “entity” that allows Google to index the content as both text and video, often securing Visual Position 0.

How does Gemini 2.0 rank video content?

Gemini 2.0 natively “watches” video to verify E-E-A-T. It parses visual context (identifying objects), audio transcripts (matching spoken words to queries), and OCR (reading text on screen) to validate that the creator has actual hands-on experience with the topic.

What is “Visual Position 0”?

Visual Position 0 refers to the Video Carousel or AI Overview video snippet. This spot now appears above standard blue links and is the primary click-driver for “How-to” and “Review” queries, replacing the traditional text-based Featured Snippet.