The next “user” visiting your website won’t be a human. It won’t care about your emotional brand story, your hero image, or your persuasive copywriting.

It will be an autonomous agent, tasked by a human to find a solution, verify pricing, and execute a transaction.

We are witnessing a fundamental shift in the mechanics of the internet. We are moving from optimizing for visibility (getting a human to click) to optimizing for utility (getting an AI agent to verify).

If your infrastructure isn’t ready, you aren’t just losing traffic. You are invisible to the economy’s new buyers. This is the era of Agentic SEO.

What is Agentic SEO? (And Why It Changes Everything)

Agentic SEO is the strategic practice of optimizing digital infrastructure and data provenance to be discoverable, verifiable, and actionable for autonomous AI agents.

Unlike traditional SEO, which targets human psychology and “dwell time,” Agentic SEO targets machine logic and “verification speed.”

The Shift: Persuasion vs. Verification

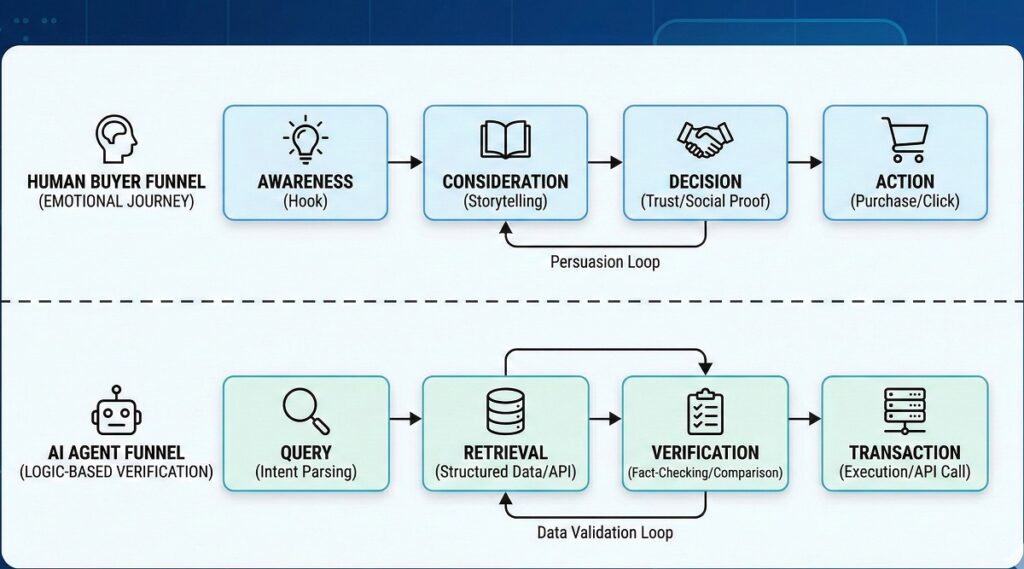

Humans buy based on emotion and justify with logic. Agents buy based on logic alone.

- Human SEO: Relies on “hooks,” storytelling, and visual appeal. The goal is a Click.

- Agentic SEO: Relies on structured data (JSON-LD), API accessibility, and information density. The goal is a Citation or Transaction.

If your pricing is hidden behind a “Contact Us” form, a human might call you. An agent will simply mark your data as “incomplete” and move to a competitor with transparent, crawlable specs.

The Threat of “Perception Drift”

As Large Language Models (LLMs) become the primary interface for information, brands face a new, silent killer: Perception Drift.

Perception Drift occurs when an AI model’s understanding of your brand degrades or distorts over time.

Why It Happens

LLMs are probabilistic, not deterministic. They don’t “know” facts; they predict the next likely token based on their training data.

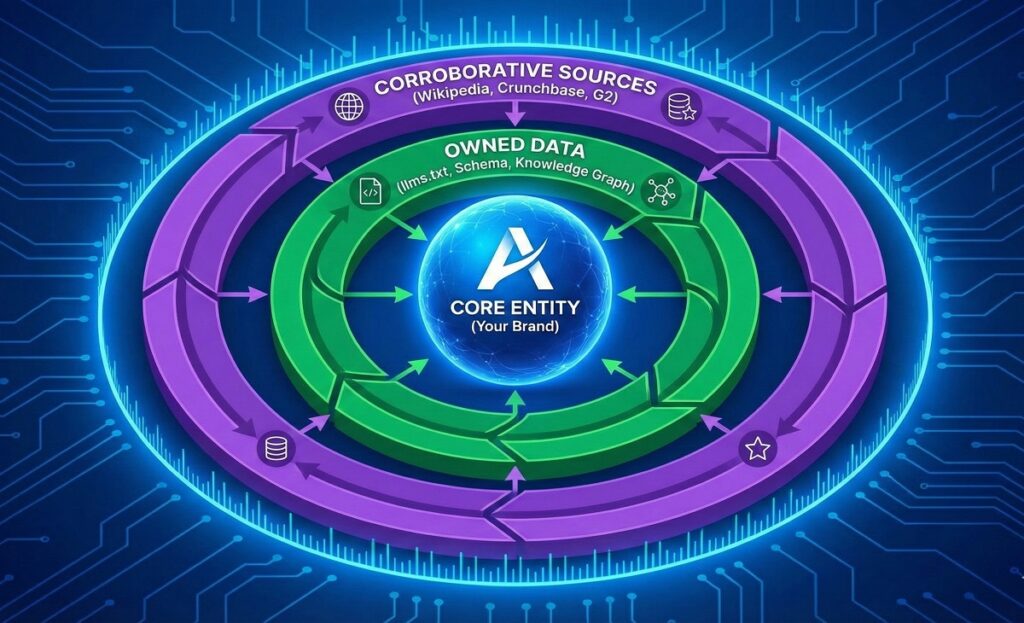

Without a constant, authoritative stream of structured data from you, the model’s picture of your brand will “drift” as each retraining or retrieval pass leans on whatever third-party sources happen to describe you.

- The Symptom: ChatGPT confidently says your enterprise software is “free to start” (it’s not), or Perplexity categorizes your SaaS platform as a “consultancy firm.”

- The Cause: Weak entity signals. If you don’t define who you are in a language the machine understands (Code/Schema), the machine will hallucinate a definition for you.

You cannot fix this with better blog posts. You must fix it with better engineering.

The Agent-Ready Stack: Infrastructure Over Copywriting

To combat drift and capture agentic traffic, you must treat your content as a dataset.

1. llms.txt: The New robots.txt for AI

We used robots.txt to tell crawlers what not to crawl. We can now use llms.txt, a proposed convention, to tell AI agents exactly what to read.

This text file acts as a curated map for AI scrapers. It should point directly to your most information-dense pages (pricing, documentation, and technical specs), bypassing marketing fluff. It reduces the “inference cost” for the agent, which can make your site a preferred source.
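As a rough illustration, the file can be generated from a short list of links. This is a minimal sketch, assuming the Markdown layout described in the llms.txt proposal (an H1 title, a blockquote summary, then H2 sections of links). The site name, URLs, and the `render_llms_txt` helper are hypothetical, and agent support for the file varies.

```python
from dataclasses import dataclass


@dataclass
class Link:
    title: str
    url: str
    note: str = ""


def render_llms_txt(site_name: str, summary: str, sections: dict[str, list[Link]]) -> str:
    """Render a Markdown llms.txt: H1 title, blockquote summary, H2 link lists."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for link in links:
            suffix = f": {link.note}" if link.note else ""
            lines.append(f"- [{link.title}]({link.url}){suffix}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical site and URLs, for illustration only.
llms_txt = render_llms_txt(
    "Example Corp",
    "B2B scheduling software. The pages below are the authoritative source for pricing and specs.",
    {
        "Pricing": [Link("Plans and pricing", "https://example.com/pricing.md", "per-seat prices and terms")],
        "Docs": [Link("API reference", "https://example.com/docs/api.md")],
    },
)
print(llms_txt)
```

The output would be served as plain text at the site root, next to robots.txt.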

2. Advanced Schema (Nesting is Key)

Basic Schema markup (Organization, Article) is no longer enough. You need Nested Schema.

Don’t just tag a product. Nest the Offer inside the Product. Nest the PriceSpecification inside the Offer. Nest the OfferShippingDetails inside the Offer.

This creates a rigid “Knowledge Graph” that leaves far less room for an LLM to hallucinate your shipping costs or return policy.
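The nesting might look like the following. This is a sketch built as a Python dictionary and serialized to JSON-LD; the product name, SKU, price, and shipping values are placeholders, and the `to_jsonld_script` helper is hypothetical. The type and property names (`Product`, `Offer`, `UnitPriceSpecification`, `OfferShippingDetails`, `MonetaryAmount`, `DefinedRegion`) come from the schema.org vocabulary.

```python
import json

# Placeholder product data; only the nesting structure is the point here.
product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Widget",
    "sku": "EX-001",
    "offers": {
        "@type": "Offer",
        "availability": "https://schema.org/InStock",
        # Price details nested inside the Offer.
        "priceSpecification": {
            "@type": "UnitPriceSpecification",
            "price": "499.00",
            "priceCurrency": "USD",
        },
        # Shipping details nested inside the same Offer.
        "shippingDetails": {
            "@type": "OfferShippingDetails",
            "shippingRate": {"@type": "MonetaryAmount", "value": "0", "currency": "USD"},
            "shippingDestination": {"@type": "DefinedRegion", "addressCountry": "US"},
        },
    },
}


def to_jsonld_script(data: dict) -> str:
    """Wrap a JSON-LD object in the script tag that goes in the page head."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


snippet = to_jsonld_script(product)
print(snippet)
```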

3. The API “Front Door”

Autonomous agents struggle with complex HTML structures (pop-ups, heavy JavaScript).

Forward-thinking brands are building “Headless SEO” endpoints. These are lightweight, JSON-based versions of their key pages. When an agent requests data, you serve the JSON, not the HTML. It’s faster, cheaper for the bot to process, and far less prone to parsing errors.
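One way to sketch such an endpoint is content negotiation: the same URL returns JSON when the client asks for it and HTML otherwise. The example below is a simplified illustration, not a production pattern. `PRODUCT_FACTS`, `wants_json`, and `respond` are hypothetical names, q-values in the `Accept` header are ignored, and it is an assumption that a given agent sends `Accept: application/json` at all (a dedicated JSON URL is a common alternative).

```python
import json

# Hypothetical product facts; in practice these would come from the same
# source of truth that renders the HTML page.
PRODUCT_FACTS = {
    "name": "Example Widget",
    "price": "499.00",
    "priceCurrency": "USD",
    "billing": "per seat, per month, annual commit",
}


def wants_json(accept_header: str) -> bool:
    """Return True if the Accept header lists application/json.

    Simplification: q-values and wildcards are ignored.
    """
    media_types = [part.split(";")[0].strip().lower() for part in accept_header.split(",")]
    return "application/json" in media_types


def respond(accept_header: str) -> tuple[str, str]:
    """Return (content_type, body) for one page, chosen by the Accept header."""
    if wants_json(accept_header):
        return "application/json", json.dumps(PRODUCT_FACTS)
    html = f"<h1>{PRODUCT_FACTS['name']}</h1><p>${PRODUCT_FACTS['price']} {PRODUCT_FACTS['billing']}</p>"
    return "text/html; charset=utf-8", html


content_type, body = respond("application/json")
print(content_type, body)
```

Both representations are rendered from one dictionary, so the JSON and the HTML cannot disagree about the price.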

Writing for Robots: Optimizing Content for Inference

Agents prefer Data Density. They are “token-efficient” buyers. Adjectives cost tokens but provide no value.

Your content strategy must pivot from “long-form guides” to “comparative utility.”

The New Editorial Standard

| Feature | Marketing Fluff (Human) | Agent-Ready Data (AI) |
| --- | --- | --- |
| Pricing | “Contact us for a tailored quote.” | “$499/mo per seat, annual commit.” |
| Speed | “Blazing fast performance.” | “120 ms p95 latency.” |
| Comparison | “The market leader in innovation.” | Markdown Table: Us vs. Competitor A (Feature by Feature). |
| Structure | Long paragraphs, storytelling. | Bullet points, bolded entities, `<table>` tags. |

Stop burying the lede. Place the answer immediately after the heading. Use “hard data” (numbers, specs, version histories) to establish semantic authority.
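A feature-by-feature comparison table can be generated from the same spec data rather than written by hand. The sketch below uses a hypothetical `markdown_table` helper; the “Us” values reuse the examples above, and the “Competitor A” values are placeholders, not real data.

```python
def markdown_table(headers: list[str], rows: list[list[str]]) -> str:
    """Render a list of rows as a Markdown table with a header separator."""
    head = "| " + " | ".join(headers) + " |"
    rule = "| " + " | ".join("---" for _ in headers) + " |"
    body = ["| " + " | ".join(row) + " |" for row in rows]
    return "\n".join([head, rule, *body])


# "Competitor A" cells are placeholders to fill from verified public specs.
table = markdown_table(
    ["Feature", "Us", "Competitor A"],
    [
        ["Pricing", "$499/mo per seat, annual commit", "(placeholder)"],
        ["Latency", "120 ms p95", "(placeholder)"],
    ],
)
print(table)
```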

Measuring Success in a Zero-Click World

How do you measure ROI when the agent doesn’t visit your site?

We must abandon “Sessions” and “Bounce Rate” as primary KPIs. Instead, focus on:

- Share of Model Voice: How often is your brand cited as the answer in Perplexity or SearchGPT?

- Referral Traffic Quality: Agent-referred traffic is lower volume but extremely high intent. The agent has already verified the specs; the visitor arrives ready to pay.

- Entity Sentiment: Use API tools to query LLMs and check whether the “drift” is positive or negative.
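Share of Model Voice can be approximated as the fraction of sampled answers that mention the brand. The sketch below assumes the answers have already been collected for a fixed set of prompts. The sample answers and brand names are invented for illustration, and `share_of_model_voice` is a hypothetical helper, not an established metric definition.

```python
import re


def share_of_model_voice(answers: list[str], brand: str) -> float:
    """Fraction of answers that mention the brand (case-insensitive, whole words)."""
    if not answers:
        return 0.0
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for answer in answers if pattern.search(answer))
    return hits / len(answers)


# Invented sample answers; real ones would come from querying each answer engine.
sample_answers = [
    "For per-seat scheduling tools, Example Corp and Acme are common picks.",
    "Acme is a popular choice.",
    "Consider Example Corp if you need an annual plan.",
    "There are many options on the market.",
]

share = share_of_model_voice(sample_answers, "Example Corp")
print(f"{share:.0%}")  # 2 of 4 answers mention the brand: 50%
```

Tracking this number for the same prompt set over time gives a trend line in place of sessions.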

FAQ: Agentic SEO & AEO Strategy

What is Agentic SEO?

Agentic SEO is the practice of optimizing web infrastructure for autonomous AI agents.

Unlike traditional SEO which targets human clicks, Agentic SEO focuses on data provenance, schema, and machine-readable formats to ensure AI agents can verify facts and transact without human intervention.

What is “Perception Drift” in AI?

Perception Drift is the degradation of an AI model’s accuracy regarding a specific brand.

It occurs when an LLM lacks authoritative, structured data updates, causing it to “hallucinate” incorrect pricing, features, or business models for a company based on outdated or diluted training data.

What is the difference between SEO and AEO?

SEO optimizes for rankings; AEO optimizes for citations.

SEO (Search Engine Optimization) aims to place a link on a results page. AEO (Answer Engine Optimization) aims to be the single, direct answer generated by an AI, focusing on trust, accuracy, and formatting for “Featured Snippets.”

Why is llms.txt important?

llms.txt creates a clean, efficient path for AI scrapers to access your core data.

It allows site owners to curate exactly which pages an LLM-based agent should read, steering it toward high-quality, up-to-date information and away from low-value marketing pages.

How do I optimize for AI buying agents?

Focus on Verification, Transparency, and Structure.

Ungate your pricing (agents can’t fill forms). Use extensive JSON-LD Schema. Create tables comparing your specs against competitors. Ensure your robots.txt allows access to these critical data points and your llms.txt links to them.
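A quick check that robots.txt is not blocking the pages agents need can be scripted with Python’s standard `urllib.robotparser`. In this sketch the robots.txt content and URLs are made up, `blocked_paths` is a hypothetical helper, and `GPTBot` is used only as an example user-agent string. Substitute the agents you care about.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt that blocks one AI crawler entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: GPTBot
Disallow: /
"""

CRITICAL_URLS = [
    "https://example.com/pricing",
    "https://example.com/llms.txt",
]


def blocked_paths(robots_txt: str, agent: str, urls: list[str]) -> list[str]:
    """Return the URLs that the given user agent is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


for agent in ("GPTBot", "OtherBot"):
    print(agent, "blocked from:", blocked_paths(ROBOTS_TXT, agent, CRITICAL_URLS))
```

Here the first agent is blocked from both pages while other agents can reach them, which is the kind of mismatch worth catching before it costs citations.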